Blog

20180919-210142 (2018/09/19)

The work on the modeller is going well. There was a lot of "invisible" work, such as various fixes for geometry processing and proper handling of float inaccuracies in the clipping code. I haven't found any formulas for spheres built from 3D Bézier surfaces, so I had to find a good approximation iteratively. Apparently everyone doing 3D just uses NURBS surfaces instead, but these are more complex for both the user and the computer, which is something I want to avoid.
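To give an idea of what finding an approximation iteratively can look like, here is a minimal sketch of the 2D analogue: fitting one cubic Bézier segment to a quarter of a unit circle by bisecting the handle length. This is not the modeller's code and says nothing about the actual sphere patches; the function names and the fitting criterion (the curve midpoint must lie on the circle) are assumptions made for illustration.

```python
import math

def bezier_point(p0, p1, p2, p3, t):
    """Evaluate a 2D cubic Bezier curve at parameter t."""
    u = 1.0 - t
    w = (u * u * u, 3 * u * u * t, 3 * u * t * t, t * t * t)
    return tuple(w[0] * a + w[1] * b + w[2] * c + w[3] * d
                 for a, b, c, d in zip(p0, p1, p2, p3))

def fit_quarter_circle(iterations=60):
    """Bisect the handle length k until the curve midpoint lies on the unit circle."""
    lo, hi = 0.0, 1.0
    for _ in range(iterations):
        k = (lo + hi) / 2
        # Quarter circle from (1, 0) to (0, 1) with handles of length k.
        x, y = bezier_point((1, 0), (1, k), (k, 1), (0, 1), 0.5)
        if math.hypot(x, y) < 1.0:
            lo = k  # curve bulges too little, lengthen the handles
        else:
            hi = k  # curve bulges too much, shorten the handles
    return (lo + hi) / 2
```

For the 2D circle a closed form is known, so the search is only a demonstration of the method; for surfaces the same idea would need an error measure over the whole patch.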

Using Bézier curves is pretty easy in the 2D case: there are a few simple rules that keep the curves always smooth. In 3D it's more complicated, especially when you can stitch different patches together, or a patch to parts of itself (as is the case with the sphere), creating more complex topologies. But I have figured out how to handle it with a simple constraint system.
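One of the simple 2D rules is that two cubic segments join smoothly when the handles on both sides of the shared point lie on one line through it, pointing in opposite directions. A minimal sketch of that rule follows; the function names are hypothetical and this is not the constraint system used in the modeller.

```python
import math

def mirror_handle(anchor, handle, keep_length=None):
    """Return the opposite handle so both handles and the anchor are collinear.

    keep_length optionally sets the length of the returned handle, otherwise
    the length of the given handle is reused.
    """
    dx, dy = anchor[0] - handle[0], anchor[1] - handle[1]
    length = math.hypot(dx, dy)
    if length == 0.0:
        return anchor  # degenerate handle: nothing to mirror
    scale = 1.0 if keep_length is None else keep_length / length
    return (anchor[0] + dx * scale, anchor[1] + dy * scale)

def is_smooth(handle_in, anchor, handle_out, eps=1e-9):
    """True when the tangents on both sides of the anchor point the same way."""
    ax, ay = anchor[0] - handle_in[0], anchor[1] - handle_in[1]
    bx, by = handle_out[0] - anchor[0], handle_out[1] - anchor[1]
    cross = ax * by - ay * bx  # zero when the two tangents are parallel
    dot = ax * bx + ay * by    # positive when they are not reversed
    return abs(cross) <= eps and dot > 0.0
```

An editor could call something like `mirror_handle` whenever the user drags one handle of a smooth point, so the other side follows automatically.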

I've also designed how the texturing will work. Again, in 3D everything is more complicated, but I have figured out all the necessary steps to make it work in a straightforward way for both the user and the computer. The surface patches provide nice automatic UVs. However, the texels are non-linearly spaced with regard to 3D positions. I'm incorporating this into the internal workflow, basically using a very high resolution texture for storage and making the various paint tools work with this non-linearity.

The texture handling will use its own "database" approach, including an on-disk cache for texture tiles to allow editing of arbitrarily sized textures. All the textures, multiplied by multiple layers and different "channels" (albedo, heightmap, transparency and specular), will require working with a lot of data. This is pretty much the same setup as in graphics editors such as GIMP.
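A rough sketch of what an on-disk tile cache can look like: a few tiles stay in memory and the least recently used ones are written to disk. The class name, the tile key `(layer, tx, ty)`, the file naming and the sizes are all assumptions for illustration, not the actual storage format.

```python
import os
import tempfile
from collections import OrderedDict

class TileCache:
    """Keeps a few texture tiles in memory and spills the rest to disk."""

    def __init__(self, directory=None, max_in_memory=4, tile_bytes=64 * 64 * 4):
        # Hypothetical defaults: 64x64 RGBA tiles stored in a temporary directory.
        self.directory = directory or tempfile.mkdtemp(prefix="tiles_")
        self.max_in_memory = max_in_memory
        self.tile_bytes = tile_bytes
        self.tiles = OrderedDict()  # (layer, tx, ty) -> bytearray, oldest first

    def _path(self, key):
        layer, tx, ty = key
        return os.path.join(self.directory, f"{layer}_{tx}_{ty}.tile")

    def get(self, layer, tx, ty):
        """Return the editable tile, loading it from disk if it was evicted."""
        key = (layer, tx, ty)
        if key in self.tiles:
            self.tiles.move_to_end(key)  # mark as most recently used
            return self.tiles[key]
        path = self._path(key)
        if os.path.exists(path):
            with open(path, "rb") as f:
                tile = bytearray(f.read())
        else:
            tile = bytearray(self.tile_bytes)  # untouched tiles start empty
        self.tiles[key] = tile
        self._evict()
        return tile

    def _evict(self):
        """Write the least recently used tiles to disk until the limit holds."""
        while len(self.tiles) > self.max_in_memory:
            key, tile = self.tiles.popitem(last=False)
            with open(self._path(key), "wb") as f:
                f.write(tile)
```

Because only the touched tiles ever exist in memory or on disk, the total texture size is limited by disk space rather than RAM.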

The internal textures will then be rendered into the desired levels of detail. This also applies to usage in the modeller, where you will just see a lower resolution view of the internal textures while still editing at the full resolution (similar to the zoom function in graphics editors).

20180821-074841 (2018/08/21)

Finished the GPU rendering part, now it renders the exact same output as the software renderer.

The general idea is that most of the effects (lights, ambient occlusion, some material properties) will be baked into static textures unique to the surfaces. The map file will contain all the texture layers used (including both generated projected textures and direct per-surface textures where needed) and the engine will be able to regenerate them as needed.

Same with the geometry, where the input will be various surface patches and the result will be a nicely blended single mesh divided into a grid, which will allow processing and updating parts of the map as needed. Each cell in the grid will be generated in multiple levels of detail to aid rendering of more distant cells.

I've been inspired a bit by the various voxel engines, both in the coarse approach (the grid that the geometry will be split into) and on a micro level, where the geometry processing allows blending between shapes and averages out geometric details that are too small, as they would otherwise produce aliasing (the detail goes into a normal map instead). This is similar to mipmapping for textures, but for geometry, and it basically ties the level of detail of geometry and textures together. The algorithm will not use actual voxels, it will just process the geometry in a way that produces a similar result.

Now that I have all the needed pieces done I can work on the actual modeller based on Bézier surface patches.

20180811-213506 (2018/08/11)

Finished all the needed primitives for the software renderer. Next stop: adding 3D rendering on the GPU so I can switch back to using mostly the GPU for development.

I'm keeping the CPU and GPU renderers in sync: the 2D rendering is already fully done and produces exactly the same output in both renderers. I have decided to use quite simple material "shaders", relying on cubemaps to provide most material effects (this includes glass materials, glossy surfaces, general lighting on dynamic models, etc.). With the right combinations of inputs one can do wonders with such a simple setup :)

I've found that with proper filtering (done during cubemap generation, so no expensive operations are needed during rendering) one can have cubemaps without any seams, and the cubemaps can actually be really tiny for most glossy materials. This makes it possible to use just world-space normal maps and to create such cubemaps dynamically to provide the lighting for dynamic objects (instead of computing the lights per-pixel).

The static world will use pre-generated lighting, mostly relying on raytraced ambient occlusion. I plan to do global illumination effects by manually placing extra lights, as I think it will work better than the automatic approach I used in the previous engine. Because the lighting had to be processed in HDR, the automatic approach tended to produce washed out colors and was basically the same as pure ambient occlusion. Manual placement will allow a more artistic approach.

Speaking of HDR, similarly to the previous engine, this one will use various fake HDR approaches as well. I don't like how with HDR you can't easily control the resulting image; with the tonemapping operators the colors also tend to be washed out and there are over- and underexposures. I always felt that HDR rendering simulates a camera more than human vision. Unless you're observing extreme light conditions, you generally do not see over- and underexposures.

20180806-130821 (2018/08/06)

Created a better video recording approach by just recording the drawing primitives at the engine level. This lets me create nice 60 FPS videos from now on :)

The following video shows the GUI system. It is scalable without losing crispness (in fact it uses high quality subpixel antialiasing everywhere), so it is very well suited for productive work in the editor. The 2D rendering is shown in the software renderer, but a high-performance GPU renderer also exists that can render complex 2D shapes with the same 64x subpixel antialiasing.

The rich text panel is not as optimized yet. I've ported it from another project of mine because I thought it could be useful for some things (like integrated help in the editor). Most of the slowdown comes from redrawing everything needlessly on change, and from the fact that all the text is rendered as 2D shapes :) This is because it uses subpixel positioning, so it's not as easy to cache into images. For normal usage in the GUI it performs well (especially in the GPU version).

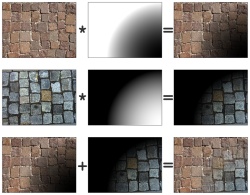

New technology: composite texture (2010/03/06)

It has been some time since the last blog activity, more than it should have been. There are two main reasons. The first is that I've been distracted by unrelated matters more than usual. The second is that I'm working on a new technology for Resistance Force's engine: something I call the composite texture.

|

|

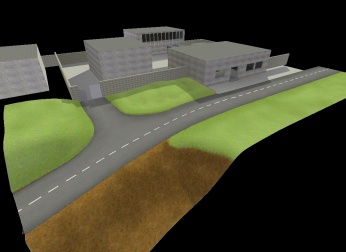

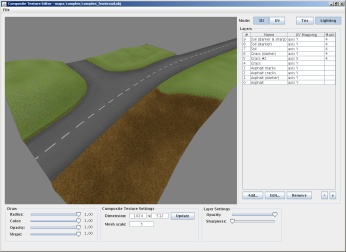

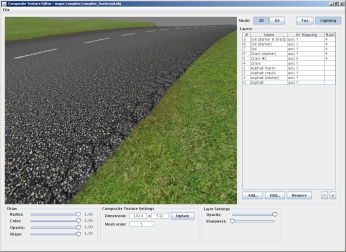

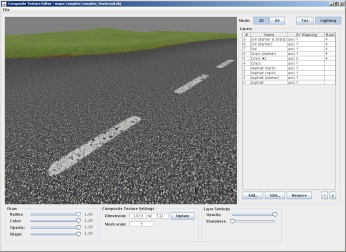

| Screenshot from the map editor with low resolution preview texture |

Composite Texture Editor - showing high resolution texture and used layers |

When I started extending the existing map and adding more details, I very soon ran into a big problem with detail texturing of large areas like terrains. You can either repeat some detail texture over and over (as is done for regular "blocky" geometry, often called brushes), but then you lose control over any details, or you can have one big texture for the whole area, but due to memory and speed constraints you can't have really big textures.

Another interesting technique is MegaTexture, developed by id Software. It allows you to have one really big texture for all geometry. The big texture is heavily compressed, stored on disk and dynamically streamed from it depending on what area the player sees. The advantages are big: in the engine it has practically constant performance characteristics, so artists can go wild and touch any individual pixel they want without worrying about anything. There are also disadvantages though: a hugely increased amount of disk storage (even more for editing) and an increased workload for artists, neither of which is very indie friendly.

I've taken a different approach for Resistance Force with the composite texture: basically I took the idea of texture splatting and extended it by removing the limit on the number of detail textures (layers) and by making it usable on any mesh, not just regular grid terrains. Having unlimited layers also opens new possibilities, such as placing any number of decals, or even touching individual pixels by turning some detailed area into a decal and editing it in a graphics editor. This way I have much more freedom when texturing while keeping disk storage and performance costs minimal.
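The core of texture splatting with an arbitrary number of layers can be sketched as blending each layer over the result so far, weighted by that layer's coverage mask. This is only an illustration of the general idea on plain Python lists; the data layout (tiled detail texture plus full-size mask per layer) and the function name are assumptions, not the engine's actual format.

```python
def composite(layers, width, height):
    """Blend any number of layers bottom to top into one RGB image.

    Each layer is (texture, mask): texture is a small repeating RGB image given
    as rows of (r, g, b) floats, mask is a full-size grid of coverage in 0..1.
    """
    result = [[(0.0, 0.0, 0.0) for _ in range(width)] for _ in range(height)]
    for texture, mask in layers:
        tex_h, tex_w = len(texture), len(texture[0])
        for y in range(height):
            for x in range(width):
                a = mask[y][x]
                if a == 0.0:
                    continue  # layer does not cover this pixel
                src = texture[y % tex_h][x % tex_w]  # detail texture repeats
                dst = result[y][x]
                result[y][x] = tuple(d * (1.0 - a) + s * a
                                     for d, s in zip(dst, src))
    return result
```

A decal fits the same scheme: it is just another layer whose mask is non-zero only in a small area.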


1. Unicornkill (2010/03/09 16:01)

Woups, you did it again!

This rocks!!!

I always hated texturing because it is so limited, this will really allow for some cool and easy effects and in general cut down texturing time by...alot.

Keep it up.

WYSIWYG in Resistance Force (2010/01/07)

WYSIWYG (What You See Is What You Get) is a major feature of Resistance Force. Originally the term was used for text processors (like MS Word) that display exactly the same layout as the resulting output from the printer. But it also perfectly describes one of the goals for this game.

Actually it's not a single feature, but more a philosophy that affects the game across several individual features. For example, in most FPS games there is a distinction between the player model as seen from your own eyes and as seen by other players (or by yourself using a 3rd person camera). There is usually a lower detailed "external" model that everyone can see (e.g. in multiplayer) and a higher detailed model of just the weapon with hands. This is a logical separation for performance and detail reasons. But it also means that the models are not synchronized; in fact, in most games the pose shown by the weapon model doesn't match the external model at all.

Games also cheat by displaying the weapon model over all other geometry. When you're very close to a wall, your external model is actually intersecting it and is visible from the other side, whereas your weapon model is just drawn over everything else, so you never see that you intersected the wall and it looks nice. This doesn't matter in a single player game and makes the life of developers much easier. But in multiplayer it's a big problem, as you reveal your position to the enemy and, what is worse, in some games you can even be shot from the other side!

The solution in Resistance Force is to have only one model that serves for both the internal and the external view. This way you see exactly what others would see, even when you're intersecting the wall. The fix is easy: when near a wall, the player just points his gun down or up so it doesn't touch the wall. The nice thing is that when some bug (e.g. in the map or game code) results in an intersection with the wall, the players will immediately notice and can report the bug.

In Resistance Force the collision is always tested against the player model and no hit boxes are used. This leads us to the next problem: collision detection in a network game. The problem is that sending packets from one computer to another over a network is slow. I don't mean the bandwidth but the latency (ping). Even on very fast connections it's still noticeable. The naive approach is to have a "dumb" client that just sends the inputs and displays what it receives from the server. The server has full authority over the game, but due to latency the player doesn't see his own actions immediately.

For example, when you look left using your mouse, the client prepares and sends a packet to the server, the server processes it and sends back the new view position. The network communication must also handle packet loss and other unpleasant things that can happen when sending packets over the internet. The whole path from moving the mouse to seeing the result on screen has a very noticeable latency, which causes a sort of motion sickness and makes the game unplayable. Even on a LAN it's noticeable, though still acceptable.

The solution is to predict on the client what the server will do, and apply the movement directly on the client when you press a button or move the mouse. In most cases, once the packet reaches the server, it arrives at the same movement and everything is OK. There can be small inconsistencies here and there (due to slightly different player positions as seen on the server side, small packet loss, etc.); in that case the server just corrects the client side position and everything is all right.
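A minimal sketch of this kind of prediction with correction, in one dimension for brevity. It assumes that a server correction is blended in over a few frames instead of snapping, and it ignores that the server state refers to a moment slightly in the past. The class, its parameters and the numeric values are made up for illustration and are not the game's code.

```python
class PredictedPlayer:
    """Client-side copy of the player that moves immediately on input."""

    def __init__(self, position=0.0, speed=5.0, correction_rate=10.0):
        self.position = position
        self.speed = speed                      # units per second (made-up value)
        self.correction_rate = correction_rate  # how quickly errors are absorbed
        self.error = 0.0                        # remaining offset to the server position

    def apply_input(self, direction, dt):
        # Run the same movement rule the server would run, without waiting for it.
        self.position += direction * self.speed * dt

    def on_server_state(self, server_position):
        # Remember how far off we are instead of snapping to the server value.
        self.error = server_position - self.position

    def update(self, dt):
        # Absorb a fraction of the remaining error each frame.
        step = self.error * min(1.0, self.correction_rate * dt)
        self.position += step
        self.error -= step
```

When the client and server agree, `error` is zero and `update` does nothing, so the common case costs almost nothing.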

Most games are so obsessed with fighting cheaters that it actually hurts gameplay for the non-cheating majority. Just how many times did you hit someone, the blood was splashing all around and nothing happened? This is a direct result of the server authority over the game and the client prediction used in most games. It is simply an effect of latency: you hit someone, the packet is sent over the network, and only when it finally arrives at the server is it decided whether you actually hit something or not. But for good effect the client has to respond immediately by splashing the blood, otherwise it would look very unnatural. It has to splash blood even when there is no hit, because the client can't know beforehand; it's not authoritative over the game.

Resistance Force is partially authoritative on the client. Movement and things like grenades are handled classically: the server decides and the client gets corrected if it differs. But firing is controlled by the client. This allows a direct response, because the client knows and can decide whether the other player has been shot or not. It also helps greatly with lag: in fact the lag issue is entirely shifted from the firing player to the escaping player. This is a good thing: when firing you're looking directly at your enemy (so having no latency is much appreciated), but when escaping you're usually not looking at the enemy, so the latency issue is not (that) visible.

More experienced players will immediately think about how easy it would be to cheat in such a system, and they're right! But this is also easy to detect, so it's not really an issue. Most game creators are obsessed with preventing any form of cheating, but they're not looking at the bigger picture. The reality is that preventing every possible cheat also hurts normal players, and the cheaters still cheat anyway :) There are better ways to deal with cheaters without hurting gameplay for normal players; I'll write more about that in a future blog post.

1. Silverfish (2010/01/07 16:51)

That was an interesting read! I'm looking forward to reading about how you will prevent cheating!

I also want to say that I REALLY appreciate your WYSIWYG approach, too many games don't do that, but now I know of two indie games that are taking that approach so it's pretty exciting. I hope the big companies will be inspired.

2. Unicornkill (2010/01/07 21:54)

Have you finished coding the server-side prediction?

Is this already implemented?

3. jezek2 (2010/01/07 23:08)

Yes, it was implemented long ago, otherwise the fully playable alpha version wouldn't work :) Actually it is not as complicated as it may sound.

4. Unicornkill (2010/01/08 08:58)

but it would take at least about 5 times as much processing capacity of your server, wouldn't it?

How do you see req server configuration for the completed version? How much players would it support?

5. jezek2 (2010/01/08 09:21)

There is practically no overhead for the client-side prediction (this is the official term for predicting, right now on the client, what will happen on the server in the future).

Actually it works by running the same game code as on the server. The only thing that complicates it is when a correction is applied to the client. Other games usually replay all actions from the last state acked by the server, but I took the simpler approach of just moving to the right position over time (so the correction movement is smoothed). It works well and has very little overhead.

Don't know yet about concrete requirements for server, but it won't consume too much CPU nor memory.

The maximum will be like 16 players on server (8vs8). More players is not much fun anymore with this kind of game. Also map sizes won't be that big, so having more players would have overcrowded effect.

6. Unicornkill (2010/01/08 17:13)

so you plan to 'keep' the general feeling of controlable battles we had in tce?

how about game speed? will you keep it at the same pace or would you make it faster? I personally found it very well balanced. That speed keeps it real and especially keeps all types of weapons usable.

7. jezek2 (2010/01/08 17:21)

Yes and yes, it will definitely stay "slower" :)

8. Unicornkill (2010/01/08 20:40)

super :p

now add a shotgun and a difficult to master akimbo and i'm hooked :p

well, a mapping tool would help too :p

darn, I gotta stay calm about this :p can't get too exited yet

Evolution of the game (part 1) (2009/12/13)

Today I was browsing through my screenshot archive and stumbled upon old internal screenshots from early development versions of the game. They're quite interesting and cover the rough development path. I never intended to publish them, but while browsing them I thought, what the heck! It would be fun to show you :)

When I started developing the engine for the game in April 2007, I had a pretty clear idea of how to create the 3D rendering part of the engine, based on previous experiments and theory gained over the years. So after a month or two I already had pretty screens:


The lighting in the old version of the engine was based entirely on dynamic shadow maps and per-pixel lighting. Later I found that this doesn't scale well to bigger maps and more lights. I realized most lights would be static anyway, so I redesigned the engine to use static lightmaps that are smoothly blended with dynamic lights and shadows. These are not classic lightmaps, but more like light coverage maps: for each light there is a separate lightmap consisting only of luminance. This approach makes it possible to combine per-pixel lighting and lightmaps.
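One way to read the coverage map idea is that the per-pixel lighting is still computed for every light, and the baked luminance value only says how much of that light reaches the pixel. A minimal sketch under that assumption, with plain diffuse lighting and hypothetical names (the engine's real shading is certainly more involved):

```python
def shade_pixel(albedo, normal, lights):
    """Sum per-pixel diffuse lighting, each light scaled by its baked coverage.

    lights: list of (direction_to_light, color, coverage), where coverage is
    the single luminance value sampled from that light's map
    (0 = fully shadowed, 1 = fully lit).
    """
    total = [0.0, 0.0, 0.0]
    for direction, color, coverage in lights:
        # Standard Lambert term, clamped so back-facing light adds nothing.
        n_dot_l = max(0.0, sum(n * d for n, d in zip(normal, direction)))
        for i in range(3):
            total[i] += color[i] * n_dot_l * coverage
    return tuple(a * t for a, t in zip(albedo, total))
```

Since the map stores only coverage, the light's color and intensity can change at runtime without rebaking, and a dynamic light simply uses a coverage computed from its shadow map instead.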

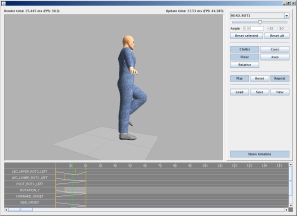

I'll show more about the engine's evolution in the following parts. In the meantime I was researching the human model and how to animate it and create clothes. The first approach was very ambitious. I developed a simple tool to load MakeHuman's human model and animate it while maintaining proper deformations and so on. Part of it was also clothing: I created a cloth simulator, as seen on the screenshots (February 2008):


Unfortunately it proved to be a futile attempt because of poor performance and complexity. The clothes system didn't behave well in every situation; the biggest problem was that the clothes sometimes passed through the body and the simulation was ruined. So the idea was abandoned and I created a classic rigged model in Blender instead. However, I later found some techniques that are much cheaper and more stable, so it's still possible that some dynamic clothes will be implemented in the distant future :)

This is all for now, keep checking the blog for additional parts :)

BTW, currently I'm working on grenades (along with minor bugfixes and missing features such as invert mouse or vsync) and planning the next release, hopefully next week or so.

1. Silverfish (2009/12/13 20:36)

Great post!

I always enjoy seeing a game evolve. It's stuff like cloth simpulation and IK that make games go from very good to awesome, I hope you'll find time to implement some stuff like that in the future!

Good to read you're working hard on the game also, I'm looking forward to the next release!

2. Nate (2010/01/04 09:05)

Interesting read. I look forward to feature blog posts!

3. Unicornkill (2010/05/01 14:06)

You might also want to limit the dynamic fabric parts to accessories.

eg you might have a headband's flaps, the belt of a sniper, grenades hanging on a jacket, a ponytail,...

How to help development (voice actor needed) (2009/12/01)

Since the release of the alpha version, some people have asked if I need help with development, mainly with modelling, mapping and things like that. The answer is no, surprisingly.

One reason is that the tools and the engine itself are not yet capable of many things, and the options are very limited now. You simply wouldn't be able to deliver much more than the current content, because the code needed for it doesn't exist yet or there are still bugs, which I carefully avoided for the public alpha release.

I work on Resistance Force iteratively. This means I do the core things first, e.g. a basic map, a human model with all the basic animations, and the game code needed for that. When there is a model and code for everything needed, I can enter a new iteration and enhance the existing stuff to be more detailed, enlarge the map, etc. This is not just adding content but also code in the engine, the game code and the editor. Everything is closely connected, which has the nice property that everything gets a unified look.

Another reason is that I have a vision for things and would like to put in the basic models and other content myself, so others can see where it's heading. I plan to add modding support in the future to allow the community to create custom maps, models, etc. But currently it's too early for that.

On the other hand, we're seeking a voice actor for the voice chat feature. This is a predefined set of about 30 short messages. If you like the game and you're interested, please contact us.

UPDATE 2009/12/02: We've already found a voice actor, thanks :)